Notes of lectures by D. Silver.

Markov Decision Process

Notes of lectures by D. Silver. A brief introduction to MDPs.

Introduction to Reinforcement Learning

Notes of lectures by D. Silver. A brief introduction to RL.

A Review of Probability

A brief introduction to probability theory and information theory.

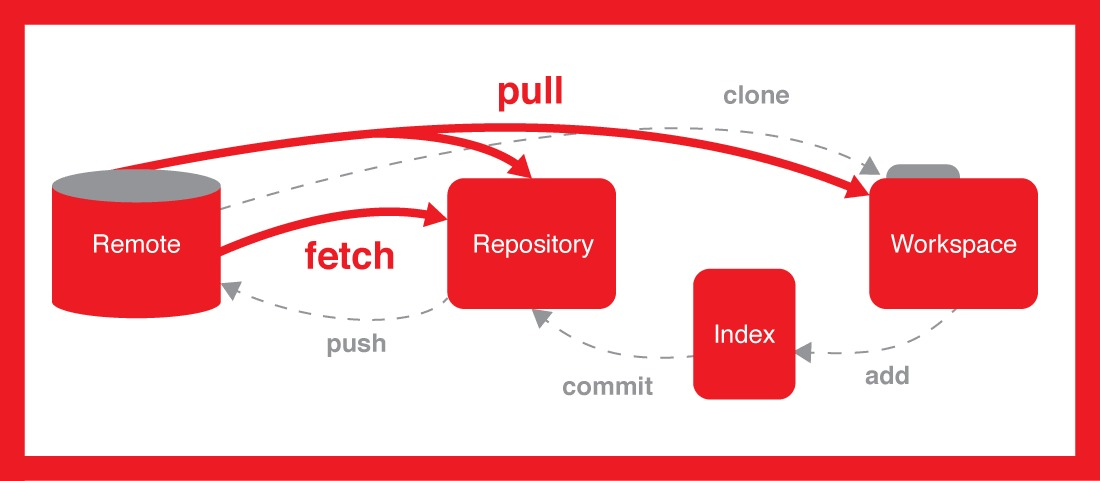

Tricks of Git

Machine Learning Basics

A short introduction to machine learning.

Tricks of Latex

Hints on LaTeX.

An Overview of Ensemble Learning

Ensemble learning combines multiple weak learners to build a strong learner.

Attention in a Nutshell

The attention mechanism has been widely applied in natural language processing and computer vision tasks.

Shell Command Zoo in Data Processing

Bash scripts are powerful for data processing. This post records tips that I have come across while processing data, and it will be kept updated.